Fish Food: Episode 628

The agentic organisation, Netflix and continuous partial attention, AI prompting techniques, and the pattern of emotions in movies

This week’s provocation: The Agentic Organisation

The next wave of AI innovation is already upon us, and it's the era of agentic AI. In fact, OpenAI’s CFO Sarah Friar has already said that 'agentic' will be the word of 2025. The pace of progression has been remarkably fast. We started with simple chatbots that were primarily designed to engage in conversation, answer questions, or guide users through a specific task. Assistants and Generative AI tools then took this to a whole new (and generative) level, with advanced natural language processing meaning that they can respond to multi-modal inputs, a much wider range of questions and more complex needs than a context-specific chatbot.

And now the era of the AI agent is emerging - a more advanced, potentially autonomous system that can make decisions based on the input it receives, and use tools to perform tasks. Agents can interact with multiple systems, collect data from a variety of sources, operate on a broader scope, and take actions without continuous human guidance. The difference between agentic AI and chatbots or assistants is articulated in this BCG definition:

'AI agents are artificial intelligence that use tools to accomplish goals. AI agents have the ability to remember across tasks and changing states; they can use one or more AI models to complete tasks; and they can decide when to access internal or external systems on a user’s behalf. This enables AI agents to make decisions and take actions autonomously with minimal human oversight'.

When Google launched their latest model Gemini 2.0, Sundar Pichai described these more advanced versions of AI as ‘models that can understand more about the world around you, think multiple steps ahead and take action on your behalf, with your supervision’. Last week OpenAI launched ChatGPT scheduled tasks, which allow for automated and timed execution of specific functions like sending reminders, generating reports, or performing recurring data analysis. They say that these tasks can be customised to meet deadlines or support workflow efficiency by handling routine activities at pre-set intervals. And (literally at time of writing) they have now also launched ‘Operator’, an autonomous agent (US only for now) which promises to automate tasks like shopping, booking travel and restaurants via a dedicated browser on your screen. These are significant further steps towards enabling a world of real AI agents.

So what happens when organisations are able to use sophisticated AI agents to perform any number of tasks and workflows? The implications of agentic AI are likely to be huge. Not just on the type of work that humans do and how they do it, but on organisational design, how strategy gets executed, skillsets that people will require, resources that businesses will need, management practices and plenty more besides.

Lee Bryant wrote some excellent thoughts setting out an expansive view on the changes this could bring. He makes the point that in spite of the world of work changing rapidly, the way in which work is coordinated and aggregated in most businesses has remained largely unchanged, and yet:

'The expected arrival of enterprise AI at scale in 2025 presents a once-in-a-generation opportunity to reinvent management and work coordination in ways that could substantially reduce operating costs, whilst making organisations more agile, adaptive and automated.'

Lee talks about how management must transition from micro-level control to governance of AI agent ecosystems - setting clear rules for autonomy, ensuring AI accountability, and enabling seamless collaboration between human and AI teams. Leaders must create a culture of trust where agents operate effectively within ethical and organisational boundaries. He uses the phrase 'programmable organisations' as a way to describe how businesses will be empowered by autonomous agents but also how there needs to be deliberate design from humans to shift from inflexible hierarchies to adaptive, network-driven frameworks that empower AI agents to take the lead in decision-making. It's a massive shift.

This agentic era is likely to emerge in stages. In the early stages vertical AI agents will become the next iteration of Software-as-a-Service (SaaS), automating a much wider range of specialised, repetitive administrative tasks across various business functions. Their focus is likely to be on specific tasks (digital marketing, customer support, quality assurance for example) where they can deliver more effective and efficient solutions, leading to significant productivity gains.

But AI agents will rapidly move beyond task automation and narrow application to become orchestrators of entire workflows and even culture within programmable organisations. We'll be able to give an agent an outcome and let the agent decide on the optimal way of achieving it rather than specifying what it needs to do. They will be able to dynamically allocate resources, optimise team structures, and even surface blind spots in organisational operations enabling companies to function with unprecedented efficiency and foresight.

Vertical AI agents that can utilise specific domain expertise and data for narrow application will be joined by horizontal agents which can handle general tasks and have much wider applicability, leading to the need to orchestrate across both generalist and specialist AIs. As AI agents increasingly interact with one another on our behalf, careful management of multi-agent ecosystems will be required to ensure trust, oversight, accountability, and appropriate behaviour among autonomous agents. Agents can potentially act as catalysts for cultural transformation, ensuring work becomes more transparent, inclusive, and outcome-driven. We may even see 'autonomous organisations' where AI systems handle all operational aspects.

The potential for all this to go awry is huge. Which is why we need careful consideration and deliberate design around implications for staffing, strategic risk, but also the balance of how humans work with AI agents. The worst option is where we simply fall into a revolutionary change almost without noticing.

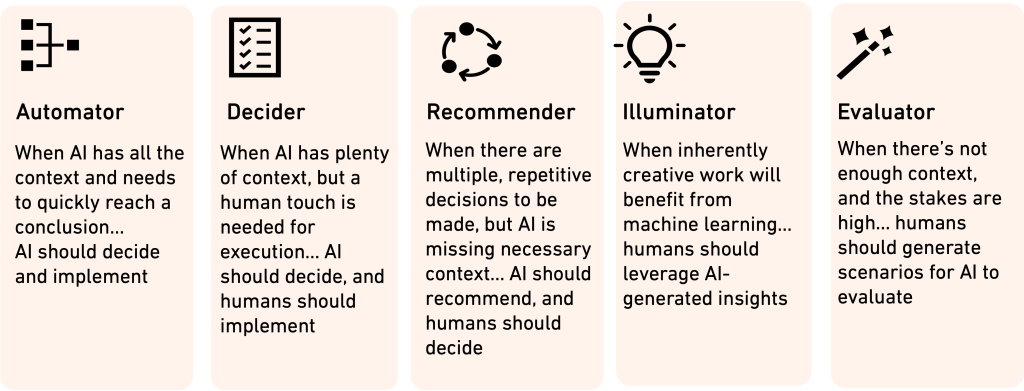

Several years ago BCG set out a simple framework for understanding the role that AI can play across an organisation, and recognising the subtle balance between AI and human capability that's needed.

It's high-level but I've always liked this as a way of understanding the role of AI, not least because it accounts for different contexts. Situations which are characterised by known knowns, relatively stable environments, repetitive tasks, and where the AI can draw on extensive data and/or knowledge and experience can be automated. But as we move left to right the AI has less and less context to work with (perhaps because of a lack of data, or high variability, newness and complexity) and so the level of human intervention increases. These kinds of subtle considerations are going to become more and more important for organisations looking to understand the right approach to designing for agentic application.

In his post Lee also talks about how internal functions and processes need to be re-imagined as services (which are composable, and likely to be at least partially automated) so that other teams can access what they need as and when they need it via internal platforms. This reminded me of Amazon's Service-Oriented Architecture (SOA) which I wrote about in my second book. As far back as 2002, Jeff Bezos issued an infamous mandate concerning how software was to be built at Amazon. Each team was to expose their data and functionality through service interfaces (APIs) and teams had to communicate with each other through these interfaces. The externalisation of these APIs formed the basis of Amazon's thinking around AWS and B2B externalised infrastructure and services (having third parties utilise your services through APIs adds scale and competitiveness but also generates revenues). But this service-oriented architecture also brought another level of efficiency to internal collaboration. Reimagining functional and team outputs as services enables an SOA where intelligent multi-agent systems can catalyse cross-team collaboration and operate with minimal dependencies or blockers to delivery.
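
To make the 'functions as services' idea a little more concrete, here is a minimal sketch in Python. It is purely illustrative and not a description of how Amazon built anything: the `Service` interface, the `FinanceService` team, the `ServiceRegistry` and the budget figure are all hypothetical names and data invented for this example. The point it tries to show is that a caller (another team, or an AI agent) only ever goes through the published interface, never into a team's internal data.

```python
from typing import Protocol


class Service(Protocol):
    """Hypothetical interface every team publishes: a request in, a response out."""

    def handle(self, request: dict) -> dict: ...


class FinanceService:
    """Illustrative team service. Its data stays private behind the interface."""

    def __init__(self) -> None:
        # Made-up example data, only reachable via handle().
        self._budgets = {"marketing": 50000}

    def handle(self, request: dict) -> dict:
        team = request["team"]
        return {"team": team, "budget": self._budgets.get(team, 0)}


class ServiceRegistry:
    """Hypothetical internal platform where teams register their services."""

    def __init__(self) -> None:
        self._services: dict[str, Service] = {}

    def register(self, name: str, service: Service) -> None:
        self._services[name] = service

    def call(self, name: str, request: dict) -> dict:
        # Callers (people or agents) use the interface, not the team's internals.
        return self._services[name].handle(request)


registry = ServiceRegistry()
registry.register("finance", FinanceService())

# An agent working for another team asks for what it needs, when it needs it.
response = registry.call("finance", {"team": "marketing"})
print(response)
```

Because every team sits behind the same kind of interface, swapping a human-run process for a partially automated one should not change anything for the teams (or agents) that depend on it.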

Yet these systems need to be deliberately designed, modelled and controlled. The potential here, if we get it right, is for the integration of AI agents to empower humans to step away from repetitive, low-value tasks and focus on areas where creativity, empathy, and critical thinking are indispensable. This reallocation of roles positions organisations to solve complex challenges where human ingenuity is critical, but to have that ingenuity and judgement super-charged with AI. AI agents should be workforce multipliers, empowering small teams and individuals to achieve great things.

The implications for organisational design, team structures, resourcing, workflows and job roles are huge. One thing is for sure - there's a whole bunch of pretty fundamental change coming our way.


If you do one thing this week…

This was a wonderful essay on the history and philosophy of Netflix, and its impact on TV, Cinema and popular culture (HT Russell Davies). The piece highlights how Netflix is developing content for ‘casual viewing’ (content that people are half-watching whilst doing something else). Ed Cotton followed up by featuring some Bain research looking at inattention and simultaneous media consumption, all of which reminded me of the concept of continuous partial attention from way back. Pair all of that with Daniel Parris’ stats-rich analysis of the broken economics of streaming services.

Photo by Venti Views on Unsplash

Links of the week

The latest Edelman Trust Barometer research (link to summary, actual report is here) is out and there are some challenging stats in here, including that almost two-thirds of people around the world fear being discriminated against, and just over two-thirds believe that business leaders intentionally mislead people.

This is a fascinating paper that applied computational analysis of acting performance to chart the pattern of different emotions through the course of movies (HT Storythings)

One thing that’s been niggling me for a while is the thought that we shouldn’t have endless new AI models to choose from (what actually IS the difference between ChatGPT o1 and 4o?) but rather that the AI tool itself should decide which model is best to serve my need/question. This week Ben Evans wrote a thoughtful post on whether better AI models are actually better.

‘Classical music is like a grand, magically infinite palace, to which I have a golden ticket. I know how to get to the places within that I love. But there are so many different, stunning rooms and vistas in this palace that I can wander through it forever and never feel like I’ve exhausted its wonders’. I loved this post from Ian Leslie (his Substack is very good) about getting into Classical Music. This is something that I’ve tried a few times but never truly managed. Ian makes a compelling case for why it’s worth the effort and then has ten great tips for how to go about it (including recommendations for pieces that are a good starting point). This inspired me to want to learn more about it.

‘There’s something strangely comforting about the idea of disappearing. It’s not about running away from problems; rather, it’s about finding a quiet place where I can simply be.’ This, from Remi, was one of the most shared Medium stories of 2024, talking about why it’s okay to press pause, retreat into the comfort of solitude, and exist without the need for validation from others.

This week I started a new project, providing updates and opinions on the latest developments on AI in commercial film and post-production for the Advertising Producers Association (APA), and it led me down a whole AI artist rabbit hole. I discovered the work of Kelly Boesch (TikTok, YouTube, Instagram) who produces AI video art using Midjourney to create images which she then animates in Runway and soundtracks using the AI music creation platform Suno. This film of hers is a particular favourite.

Some very useful AI prompt techniques here related to writing content, including how to use AI tools for proofreading, generating hooks for social media, and drafting emails. The persona, task, steps, constraints prompt process is similar to the approach that I use for prompting, and it brings a step change in the quality of results you get.
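
For anyone who wants to try the persona, task, steps, constraints structure, a minimal sketch in Python might look something like this. The `build_prompt` function, the section labels and the example wording are all assumptions made for illustration, not taken from the linked piece, and the resulting text can be pasted into whichever AI tool you use.

```python
def build_prompt(persona: str, task: str, steps: list[str], constraints: list[str]) -> str:
    """Assemble one prompt string from the four parts (hypothetical helper)."""
    numbered_steps = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    bulleted_constraints = "\n".join(f"- {item}" for item in constraints)
    return (
        f"Persona: {persona}\n\n"
        f"Task: {task}\n\n"
        f"Steps:\n{numbered_steps}\n\n"
        f"Constraints:\n{bulleted_constraints}"
    )


# Example values are invented purely to show the shape of the prompt.
prompt = build_prompt(
    persona="You are an experienced newsletter editor.",
    task="Proofread the draft email below.",
    steps=[
        "Read the draft in full.",
        "List any typos.",
        "Suggest a clearer subject line.",
    ],
    constraints=["Keep British spelling.", "Do not change the meaning."],
)
print(prompt)
```

Keeping the four parts as separate arguments makes it easy to reuse the same persona and constraints while swapping in a new task.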

Quote of the week

“I have a foreboding of America in my children’s or grandchildren’s time–when the United States is a service and information economy; when nearly all of the manufacturing industries have slipped away to other countries; when awesome technological powers are in the hands of a very few, and no one representing the public interest can even grasp the issues; when the people have lost the ability to set their own agendas or knowledgeably question those in authority; with our critical faculties in decline, unable to distinguish between what feels good and what’s true, we slide almost without noticing, back into superstition and darkness.

And when the dumbing down of America is most evident in the slow decay of substantive content in the enormously influential media, the 30-second sound bites now down to 10 seconds or less, lowest-common-denominator programming, credulous presentations on pseudoscience and superstition, but especially a kind of celebration of ignorance.”

Unbelievably, this was written in 1995 by Carl Sagan, in his book The Demon-Haunted World: Science as a Candle in the Dark.

And finally…

This was a really lovely application of Generative AI - using an LLM trained on GoodReads to find your next favourite book. (HT Storythings)

Weeknotes

This week I was delivering more virtual early morning sessions for my banking client in the Middle East and I also nipped down to Cardiff to deliver a bespoke version of my IPA Advanced Application of AI in Advertising for an agency there. Fun fact about Cardiff - it has more hours of daylight than Milan. Next week I’ll be out in the Middle East working face-to-face with said banking client and prepping for some interesting things coming up the week after. More on that next week.

Thanks for subscribing to and reading Only Dead Fish. It means a lot. This newsletter is 100% free to read so if you liked this episode please do like, share and pass it on.

If you’d like more from me my blog is over here and my personal site is here, and do get in touch if you’d like me to give a talk to your team or talk about working together.

My favourite quote captures what I try to do every day, and it’s from renowned Creative Director Paul Arden: ‘Do not covet your ideas. Give away all you know, and more will come back to you’.

And remember - Only dead fish go with the flow.