Fish Food: Episode 640 - On AI model collapse and the era of experience

Avoiding generative inbreeding, how AI will change advertising, the BBC on next gen media, McKinsey on AI in strategy, and my favourite moment in classical music

This week’s provocation: Avoiding ‘generative inbreeding’

Will AI end up eating itself? What happens when AI models run out of human-generated inputs and are trained on content created by AI? Is there a risk that AI models trained on AI slop become ‘inbred’ and get into a self-reinforcing death spiral of declining quality? So many questions.

AI model collapse refers to the progressive degradation of generative AI models when they are trained predominantly on data generated by other AI systems. This may seem fanciful at this relatively early stage of AI model development, but it presents a significant risk that could become very real sooner than we think.

A study published in Nature highlighted that indiscriminate use of AI-generated content in training can cause irreversible defects in models, leading to a loss of diversity and the disappearance of rare but important data patterns. Recursive training of this kind leads to a feedback loop where errors and biases are amplified over successive model generations, resulting in a decline in performance and outputs that are less accurate, less diverse, and increasingly detached from real-world data.
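To make that feedback loop concrete, here is a toy Python sketch of the mechanism (not the method used in the Nature study). Each ‘model’ is simply the word-frequency distribution of the previous generation’s output, and each new generation is trained only on samples drawn from it. The vocabulary, the Zipf-like weights, the sample size and the number of generations are arbitrary assumptions chosen for illustration.

```python
import random


def next_generation(samples, n, rng):
    """'Train' on the previous output and generate n new samples.

    Sampling with replacement from the previous generation's output is
    equivalent to sampling from its empirical frequency distribution.
    """
    return rng.choices(samples, k=n)


def simulate_collapse(generations=30, n=200, seed=0):
    """Return the number of distinct words seen in each generation."""
    rng = random.Random(seed)
    # Generation 0 stands in for human data: a hypothetical vocabulary of
    # 50 words with a few common ones and a long tail of rare ones.
    vocab = [f"word{i}" for i in range(50)]
    weights = [1 / (rank + 1) for rank in range(len(vocab))]
    data = rng.choices(vocab, weights=weights, k=n)
    diversity = [len(set(data))]
    for _ in range(generations):
        # Each generation learns only from the previous generation's output.
        data = next_generation(data, n, rng)
        diversity.append(len(set(data)))
    return diversity


if __name__ == "__main__":
    print("distinct words per generation:", simulate_collapse())
```

Because each generation can only reproduce words it has already seen, the count of distinct words can never go up, and a rare word that misses a single round of sampling is gone for good: the ‘disappearance of rare but important data patterns’ in miniature.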

Model collapse matters for a few key reasons:

Erosion of data quality: As AI-generated content proliferates online, an inability to distinguish between human and machine-generated data could well lead to a decline in input diversity and overall AI performance.

Innovation stagnation: Models trained on homogenised data may fail to capture the richness and variability of human language and creativity, resulting in outputs that are repetitive and lack novelty.

Reputational, societal and operational risks: Increased misinformation or biased outputs from collapsing models could erode consumer trust, entrench bias and cause reputational harm.

In this opinion piece Louis Rosenberg points out that even if we solve the inbreeding problem, widespread reliance on AI could stifle human culture because GenAI systems ‘are explicitly trained to emulate the style and content of the past, introducing a strong backward-looking bias’. If systems are not designed to avoid model collapse, we may well see increasingly repetitive or generic outputs, rising error rates, and a proliferation of misinformation and bias.

Sturgeon’s Law, coined by science fiction writer Theodore Sturgeon, states that ‘90% of everything is crap’. It reminds us that mediocrity is common across all fields, and that the art of making any kind of dent in the universe is to elevate ourselves out of the mediocre and into the exceptional 10%. Perhaps the biggest risk from widespread model collapse is that this 90% creeps closer to 100%.

Of course, there are strategies that businesses and AI developers can use to mitigate generative inbreeding: a ‘human-in-the-loop’ approach that ensures diverse and authentic training data; deliberate use of human-generated inputs in hybrid models, which benefit from the efficiency of AI while retaining the creativity and contextual understanding of human contributors; systems that track the origin of data used in training; and human reviewers who assess and correct AI outputs.
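One way to combine provenance tracking with deliberate use of human-generated inputs is to label every training record with its origin and cap the share of synthetic data in each training mix. The sketch below is a hypothetical illustration: the `Record` type, the ‘human’/‘synthetic’ labels and the 30% default cap are assumptions, not an established standard.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    text: str
    origin: str  # hypothetical provenance label: "human" or "synthetic"


def build_training_mix(records, max_synthetic_pct=30, seed=0):
    """Keep every human-origin record and add synthetic ones only up to a cap.

    max_synthetic_pct is the largest share (in percent) of the final mix that
    may be synthetic. Records with any other origin label are left out, on the
    cautious assumption that unlabelled data may itself be synthetic.
    """
    if not 0 <= max_synthetic_pct < 100:
        raise ValueError("max_synthetic_pct must be in [0, 100)")
    human = [r for r in records if r.origin == "human"]
    synthetic = [r for r in records if r.origin == "synthetic"]
    # synthetic / (human + synthetic) <= p / 100
    #   <=> synthetic <= human * p / (100 - p); integer maths avoids rounding.
    allowed = len(human) * max_synthetic_pct // (100 - max_synthetic_pct)
    rng = random.Random(seed)
    chosen = rng.sample(synthetic, min(allowed, len(synthetic)))
    return human + chosen


if __name__ == "__main__":
    pool = [Record(f"h{i}", "human") for i in range(10)] + [
        Record(f"s{i}", "synthetic") for i in range(50)
    ]
    mix = build_training_mix(pool, max_synthetic_pct=20)
    print(len(mix), sum(r.origin == "synthetic" for r in mix))
```

The cap only limits what goes into a given training run; it is only as trustworthy as the provenance system that sets the labels in the first place.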

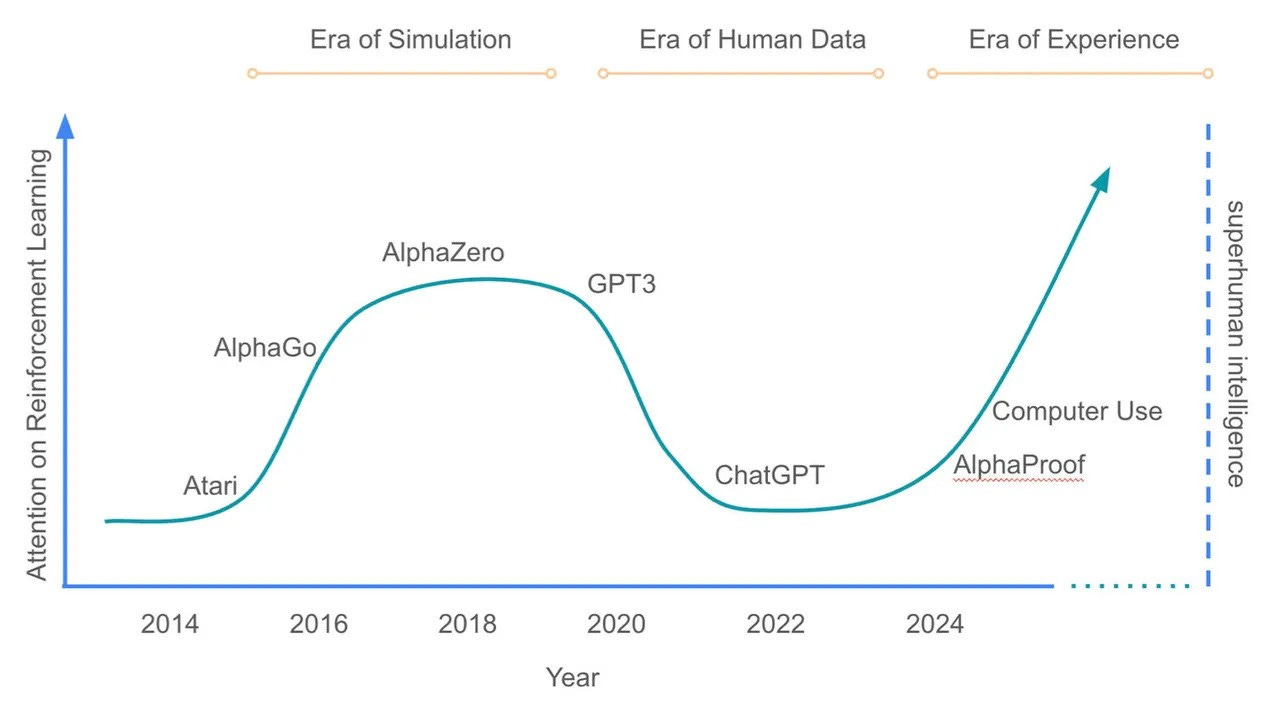

One of the more ‘out there’ ideas for mitigating collapse is to integrate embodied AI systems that learn from real-world interactions (such as robotics) to gather ‘authentic’ data and experiences. Researchers at Google DeepMind are already arguing that AI training data is too static and is restricting AI’s ability to reach new heights. In a paper posted last week they make the case for more focus on reinforcement learning: a type of machine learning where an agent learns to make decisions by interacting with an environment, receiving rewards or penalties for its actions, and optimising its behaviour to maximise cumulative reward over time.
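For anyone who hasn’t met reinforcement learning before, a minimal sketch may help. The example below uses tabular Q-learning, one classic RL algorithm (not the approach proposed in the DeepMind paper), on a hypothetical five-cell corridor. The agent is rewarded for reaching the last cell and pays a small penalty for every other step, so it has to discover from experience that moving right maximises cumulative reward. The environment, rewards and hyperparameters are all illustrative assumptions.

```python
import random

N_STATES = 5        # hypothetical corridor: cells 0..4, cell 4 is the goal
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Toy environment: return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    if nxt == N_STATES - 1:
        return nxt, 1.0, True   # reward for reaching the goal
    return nxt, -0.01, False    # small penalty for every other step


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Learn action values Q(state, action) purely from interaction."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally; otherwise exploit current estimates.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward + discounted best future value.
            future = 0.0 if done else gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = nxt
    return q


def greedy_policy(q):
    """Best learned action in each non-goal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

Nobody tells the agent which way to go: the preference for moving right emerges from rewards and penalties accumulated over many episodes, which is the kind of self-discovered knowledge the paper’s authors want to bring back.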

It’s been almost a decade since the AI model AlphaGo defeated world champion Lee Sedol in a five-game match of Go, the notoriously complex board game that originated in ancient China. AlphaGo and its successor AlphaZero both used reinforcement learning through self-play to become better than the best humans at something specific. But this form of learning was restricted to narrow applications like games, where all the rules are known. GenAI models are different in that they can deal with spontaneous human input without explicit rules to determine outputs.

The authors of the paper say that moving away from reinforcement learning meant that ‘something was lost in this transition: an agent's ability to self-discover its own knowledge’. LLMs, they say, rely on human pre-judgement at the prompt stage, which is limiting. They argue that AI should be allowed to have ‘experiences’ and interact with the world, creating goals and understanding from the knowledge it gains in dynamic environments. They call this the ‘era of experience’.

This ‘streams’ approach, as they call it, would shift AI training from static datasets to continuous experiential learning and, they argue, better equip AI to deal with complex, fast-changing scenarios and environments. By grounding AI learning in real-life experience, this approach aims to preserve data diversity and authenticity, mitigating the risks associated with recursive training on AI-generated content.

It’s an interesting idea that would seem to take current capability to a whole different level. But so many questions.

Rewind and catch up:

Is it worth learning how to prompt?

Being more intentional in the age of AI

Changing how the game is played

Photo by Brian Kelly on Unsplash

If you do one thing this week…

‘I remain convinced that every AI tool claiming to “make ads” has been built by people with no actual experience working in advertising’. Tom Goodwin’s new column on how AI is likely to change advertising in a multi-stage way, rather than all at once, is worth reading. Like him, I dislike lazy-endism, and think that transformative change rarely happens overnight.

Photo by Joe Yates on Unsplash

Links of the week

This was a fascinating post from BBC R&D on the forces shaping the media landscape for the next generation, featuring a ‘5 i’s’ framework (Internet-only, intelligent, intermediated, interactive, immersive). Lots of good thinking. (HT Pete Marcus, Delphi)

Despite predictions that a wide range of roles will soon disappear due to AI, we’ve yet to see the real impact. But analysis of US jobs data shows that two particular jobs have already seen sharp falls: writers and computer programmers. As this short FT video suggests, the pattern indicates that what AI struggles with is not intellectual difficulty but messy workflows. Roles that involve high levels of collaboration, unpredictability, changing contexts and juggling priorities are difficult for a machine to replicate.

Meanwhile, Anthropic expects AI-powered virtual employees to begin ‘roaming corporate networks’ in the next year.

And Mustafa Suleyman, Microsoft's AI division head, has revealed plans for AI companions that adapt to individual users.

Speaking of Microsoft, an interesting study from Microsoft Research and Carnegie Mellon on the impact of GenAI on critical thinking shows that confidence in AI negatively correlates with critical thinking, while self-confidence has the opposite effect (TL;DR summary of the key findings here).

(Friend of ODF) Mike Scheiner has an interesting post on asking better questions when using AI. I liked his reference to McKinsey’s five roles that AI can play in strategy development.

This Forbes piece on prompting newer models (like GPT-4.1) was interesting, and a good reminder of OpenAI’s recommended approach to prompting for deeper research: role and objective, instructions, reasoning steps, output format, examples, context, and final instructions.
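That seven-part structure lends itself to a simple reusable template. Here is a hypothetical Python helper that assembles a prompt from those sections in the order listed; the function name and the Markdown-style `#` headings are assumptions made for illustration, not a format OpenAI prescribes.

```python
# The seven sections, in the order listed above.
SECTION_ORDER = (
    "Role and Objective",
    "Instructions",
    "Reasoning Steps",
    "Output Format",
    "Examples",
    "Context",
    "Final Instructions",
)


def build_prompt(sections: dict[str, str]) -> str:
    """Join the supplied sections in a fixed order, skipping any left empty."""
    unknown = set(sections) - set(SECTION_ORDER)
    if unknown:
        raise ValueError(f"unknown section names: {sorted(unknown)}")
    parts = []
    for name in SECTION_ORDER:
        body = sections.get(name, "").strip()
        if body:
            parts.append(f"# {name}\n{body}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt({
        "Role and Objective": "You are a marketing strategist. Summarise the key trends in the brief.",
        "Output Format": "Five bullet points, each under 20 words.",
        "Context": "<paste the brief here>",
        "Final Instructions": "Think step by step before answering.",
    }))
```

Fixing the order in one place means every prompt a team writes follows the same shape, and a misspelt section name fails loudly instead of being silently dropped.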

Staying with prompting, I liked this idea about getting the AI to prompt YOU rather than the other way around. (HT Zoe Scaman)

'Confidence is impressive, but enthusiasm can change people’s lives.' Amen to that. From this lovely post by Tina Roth-Eisenberg

And finally…

This week, for no reason in particular, I was reminded of this amazing moment. Replacing another musician at a lunchtime concert, pianist Maria João Pires was expecting to play Mozart's Piano Concerto No. 23 in A major. The video begins at the moment the orchestra start playing and she experiences every musician’s worst nightmare, realising that she’s learned the wrong concerto and it’s actually No. 20 in D minor. What she does next is incredible.

Weeknotes

This week I was mostly putting together my next quarterly digital marketing trends piece for Econsultancy (webinars are next Thursday if you’re interested) and I also ran an AI in marketing training session for a marketing team in London. Next week I’ll be keynoting the CIPD Change Management conference, doing said webinars and running an all-day workshop on AI with leaders from my travel client in Derby. Fun fact about Derby: it was the site of the world's first factory and England's first public park.

Thanks for subscribing to and reading Only Dead Fish. It means a lot. This newsletter is 100% free to read so if you liked this episode please do like, share and pass it on.

If you’d like more from me my blog is over here and my personal site is here, and do get in touch if you’d like me to give a talk to your team or talk about working together.

My favourite quote captures what I try to do every day, and it’s from renowned Creative Director Paul Arden: ‘Do not covet your ideas. Give away all you know, and more will come back to you’.

And remember - only dead fish go with the flow.
