Fish Food 651: Using AI as a thought partner

Challenging assumptions through AI, context engineering, GEO, using AI for category entry point research, and a random English place name generator

This week’s provocation: Thinking differently using AI

A lot of the focus on the benefits of AI is (perhaps naturally) on efficiencies right now. But one of my favourite ways of using these tools is to challenge my own thinking, to open up new lines of exploration, and to originate new perspectives and ideas that I hadn’t even thought of.

If we are to avoid what has become known as ‘cognitive debt’ (the idea that we grow lazy in our thinking by outsourcing it to AI) we need to really systematise approaches and habits that combine AI with human cognition in ways that generate new possibilities, not just make the most of the ones that already exist. For businesses, the competitive advantage of the future won’t only be about who can be the most efficient and productive, it will be about who can innovate and think in creative ways. And so we need to think about AI in that way as well.

But at a personal level, this is also about how it can help me to get to places that I couldn’t have otherwise got to on my own. And I’m finding it hugely beneficial in doing just that. So I thought I’d set out some of the ways in which I try and systematise this. I’m not a fan of ‘magic prompts’ as you probably know, but I have included example prompts here which may help kick start the process:

Using perspective triangulation

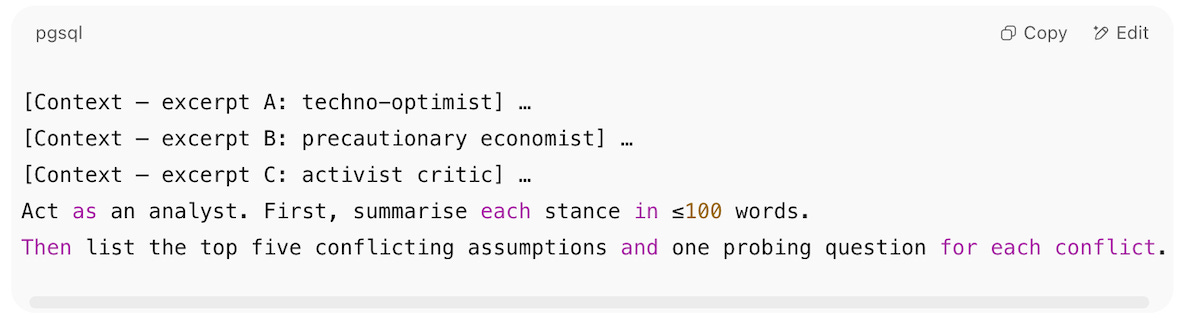

In this Ian Leslie podcast the brilliant Jasmine Sun talks about creating a ‘syllabus’ for the AI to learn from, and it’s definitely a useful technique to carefully curate a collection of documents/studies/information which the GPT can use as source material. For example, you can collate this into the ‘project files’ section of ChatGPT (or Claude) projects or a Google Drive folder and then set up a Gemini Gem which can use this folder for context (more about how I use AI Project spaces in strategy here). These features have persistent memory which is wonderfully useful for deeper dives into a topic. Once you have this you can then surface blind spots by forcing the model to contrast clashing viewpoints - the best way I’ve found to do this is to ask the GPT to set out 3-5 short excerpts that take clearly different positions on your topic (e.g. a status-quo defender is great at surfacing reasons why change may not happen, an optimistic viewpoint helps show what’s possible, a pessimistic one reveals potential barriers). Then ask it to list the key assumptions or questions behind each (ChatGPT visualised this for me below).

Anchoring the model in explicit tensions pushes it to reason across boundaries rather than averaging them.
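If you want to reuse this across topics, the triangulation request can be templated. This is only a minimal sketch in Python: the function name `triangulation_prompt` and its parameters are hypothetical and purely for illustration, and it simply builds the text you would paste into whichever tool you use.

```python
def triangulation_prompt(topic, stances):
    """Build a prompt asking for contrasting excerpts and the assumptions behind each.

    `stances` is a list of 3-5 clearly different positions on the topic
    (hypothetical helper for illustration, not part of any AI tool).
    """
    if not 3 <= len(stances) <= 5:
        raise ValueError("use between 3 and 5 stances")
    lines = [
        f"Topic: {topic}",
        "",
        "Write one short excerpt for each of these clearly different positions:",
    ]
    for number, stance in enumerate(stances, start=1):
        lines.append(f"{number}. {stance}")
    lines += [
        "",
        "Then list the key assumptions or questions behind each position.",
        "Keep the tensions between positions explicit rather than averaging them.",
    ]
    return "\n".join(lines)


prompt = triangulation_prompt(
    "Moving our loyalty scheme to a paid subscription",
    ["Status-quo defender", "Optimist", "Pessimist"],
)
print(prompt)
```

The three stances here mirror the examples above; swap in whatever positions create the most useful friction for your topic.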

Board of Brains

There’s another technique shared recently by Lani Assaf at Anthropic which I also really like - creating a ‘Board of Brains’, or setting up Claude (or other) Projects that can act as independent advisors or challengers, each bringing their own different perspective. To do this you create a new Project ("[Person's Name] Brain"), upload as much context as possible (e.g. public writing, podcasts), and add some project instructions ("You embody [Person/Role]'s perspective. Review my work through their specific lens. Flag what they'd notice. Suggest what they'd recommend. Be direct."). This could be respected individuals with an interesting point of view, or experts, or customer personas, but I liked what Lani said about using this technique:

‘What’s really cool about using Claude consistently in this way is the compounding effect. Over time, these other perspectives become interwoven with yours. You’re systematically borrowing someone else's lens until it becomes part of how you see.’
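If you are setting up several of these at once, it can help to generate the Project names and instructions from one template. A minimal sketch, assuming you then paste each result into a Project by hand: `board_of_brains` is a hypothetical helper for illustration, and the instruction text is simply the wording quoted above with the name filled in.

```python
# Instruction wording taken from the Board of Brains description above.
INSTRUCTIONS = (
    "You embody {who}'s perspective. Review my work through their specific lens. "
    "Flag what they'd notice. Suggest what they'd recommend. Be direct."
)


def board_of_brains(names):
    """Map a Project name ("<Name> Brain") to its project instructions.

    Hypothetical helper: it only prepares text, it does not create Projects.
    """
    return {f"{name} Brain": INSTRUCTIONS.format(who=name) for name in names}


board = board_of_brains(["Sceptical CFO", "First-time customer"])
for project_name, instructions in board.items():
    print(project_name)
    print(instructions)
    print()
```

The two personas are made-up examples; the context you upload to each Project still does most of the work.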

Role-play debate

Another similar technique is to simulate a live debate between people with different perspectives. Here you give the GPT a cast list, tell it to speak in numbered turns, giving one paragraph on a topic per role. You can then pause after each cycle and ask follow-up questions, go deeper on specific arguments or add a new ‘guest’. Seeing arguments collide in real time really opens up your mind to new perspectives and lets you notice assumptions you didn’t know you were making.
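The debate set-up is easy to template too. Again, this is just a sketch under my own assumptions: `debate_prompt` and its `cycles` parameter are hypothetical names, and the output is plain text for you to paste in.

```python
def debate_prompt(topic, cast, cycles=1):
    """Build a role-play debate prompt with a cast list and numbered turns.

    Hypothetical helper for illustration; `cycles` is how many rounds to run
    before the model pauses for your follow-up.
    """
    if len(cast) < 2:
        raise ValueError("a debate needs at least two roles")
    roles = "\n".join(f"- {role}" for role in cast)
    return (
        f"Simulate a live debate on: {topic}\n"
        f"Cast:\n{roles}\n"
        "Speak in numbered turns, giving one paragraph per role.\n"
        f"After {cycles} full cycle(s), pause and wait for my follow-up "
        "question or a new guest."
    )


print(debate_prompt(
    "Should we launch in a second market this year?",
    ["Growth lead", "Finance director", "Long-standing customer"],
))
```

Adding a new ‘guest’ mid-debate is then just a follow-up message naming the extra role.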

Other prompting techniques

I wrote a whole thing a while back about challenging your thinking through prompting but I’m going to highlight a couple of favourite techniques from there and also some new ones that I’ve started using. You can, of course, include in your prompts a deliberate instruction to challenge common assumptions, avoid typical biases or echo-chamber thinking in the area. You can ask it to play devil’s advocate and force you to justify your thinking, or to flag when you are being vague and ask clarifying questions. You can even set the whole GPT up to always avoid lazy thinking (e.g. in ChatGPT this is in Settings > Personalisation > Custom Instructions). But there are also some more specific and deliberate techniques:

Norm switching: explore the norms from a completely unrelated context (e.g. a totally different sector) and apply them to your context (e.g. your own sector)

Constraint reversal: sometimes challenging the model with a ‘ridiculous’ constraint (‘how would you do X with no budget’) and asking for five solutions based on that can jolt it out of its usual pattern and force lateral moves it wouldn’t surface under standard feasibility filters.

Counterfactual storyboard: this is useful for identifying the potential fragility of existing plans. Ask the model to create a short narrative in which the opposite of a key assumption is true, and then examine how the system adapts or fails. For example: ‘Imagine that instead of expanding, our target market shrinks by 50% in two years. Show the sequence of events month by month and identify three leverage points we could still exploit.’

Forced analogy engine: here you preload a list of different domains which could be completely random (like principles of acting, or conservation practices, or jazz improvisation), or it could be examples of businesses that are particularly good at a specific thing (like Apple for product design). Then you tell the GPT to map each to your challenge and extract at least one transferable principle.

Negative inversion: Ask something like ‘list ten ways this initiative could fail spectacularly’, and then invert each item into a preventative or mitigating action, or a new idea. This can help reveal and prevent potential points of failure but also open up new possibilities once you invert it.

Ladder up/ladder down: this is about toggling between the big-picture ‘why’ and the more executional ‘how’. Write your starting challenge in as succinct a way as possible. Then ladder up from that one abstraction level higher (‘Why does this matter?’), and do it a couple more times or until the answer starts becoming vague. This can help reveal implicit purpose and higher-order goals. Then go back to your original challenge and ladder down (ask ‘What’s the very first observable action?’, and then ‘What must happen immediately before that?’), and do that a couple more times. This helps reveal practical dependencies and hidden blockers. You can even compare the two ladders to see where the strategic intent (top) fails to translate into concrete actions (bottom). That gap is an interesting area to focus on. If it helps, I created a cheat sheet on this with a simple example which you can download here.
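Several of the techniques above boil down to a reusable prompt with your challenge dropped in. A minimal sketch, assuming you keep them in a small lookup: the `TECHNIQUES` dictionary, its keys and `build_prompt` are hypothetical names for illustration, and the wording is adapted from the descriptions above rather than being any kind of ‘magic prompt’.

```python
# Prompt templates adapted from the techniques described above.
TECHNIQUES = {
    "constraint_reversal": (
        "Challenge: {challenge}\n"
        "How would you do this with no budget? Give five solutions."
    ),
    "negative_inversion": (
        "Initiative: {challenge}\n"
        "List ten ways this initiative could fail spectacularly. Then invert "
        "each item into a preventative or mitigating action, or a new idea."
    ),
    "ladder_up": (
        "Starting challenge: {challenge}\n"
        "Ask 'Why does this matter?' and answer it. Repeat the question on "
        "each answer until the answer starts becoming vague."
    ),
    "ladder_down": (
        "Starting challenge: {challenge}\n"
        "Ask 'What's the very first observable action?', then 'What must "
        "happen immediately before that?'. Repeat the second question a "
        "couple more times."
    ),
}


def build_prompt(technique, challenge):
    """Fill the chosen technique's template with a succinct challenge."""
    if technique not in TECHNIQUES:
        raise ValueError(f"unknown technique: {technique}")
    return TECHNIQUES[technique].format(challenge=challenge)


challenge = "Increase repeat purchases among first-time buyers"
print(build_prompt("ladder_up", challenge))
print()
print(build_prompt("ladder_down", challenge))
```

Running both ladders on the same challenge makes it easier to compare them side by side and spot the gap between intent and action.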

Using AI tools like a search engine means that you are potentially missing a huge opportunity for them to open up new lines of exploration and to take your thinking and ideas to places that you couldn’t have got to on your own. I believe that intellectual curiosity will only become more important in the era of AI. It’s about seeking out the right answer, not just the one in front of you. It’s what separates lazy thinking from deeper deliberation, and mediocre outputs from exceptional ones.

Rewind and catch up:

Have we got personalisation all wrong?

Complexity bias in AI application

Gigantomania and why big projects go wrong

Superagency: Amplifying Human Capability with AI

Photo by Glen Carrie on Unsplash

If you do one thing this week…

I need to write more about context engineering - the choices you make around the data and information that you give to an AI to make decisions. In marketing you might define it as the deliberate design of the environment and inputs AI systems use so that outcomes consistently favour a brand’s visibility, relevance, and influence. In an enterprise context it’s the curation and organisation of information that different AI engines and agents can work from. As Ethan Mollick has said, understanding the kind of context that the AI needs to do an exceptional (and not just average) job is increasingly critical:

‘"Context" is actually how your company operates; the ideal versions of your reports, documents & processes that the AI can use as a model; the tone & voice of your organization.’

This recent post is an excellent primer on context engineering.

Links of the week

This was a useful demonstration of how to do ‘quick and dirty’ synthetic category entry point research from the APAC Head of Content Solutions at LinkedIn. Synthetic research, whilst no substitute for human research, is fascinating. Firestarters guest Simon Carr wrote a piece this week about how he’s using it.

And this, from Jakob Nielsen, is the best and most comprehensive guide to GEO (Generative Engine Optimisation) that I’ve read so far (HT David Armano)

According to BCG’s latest ‘AI at Work’ survey, over half of workers would use AI without company approval (I’ve seen this a lot), and only a third think that they’ve been adequately trained in how to use the tools (HT Bruce Daisley)

A journalist tried Search Live, Google’s new feature which allows you to hold real-time voice conversations with an AI-powered version of Search, and ended up getting into a philosophical debate about fiction books with the AI

This was actually a great visualisation of how LLMs really work - technical without being too technical if you know what I mean

And finally…

‘I was looking at some English placenames recently and thought they all sounded made up, and thought it would be fun to make a thing that makes up English place names — so that's exactly what I did’. Nice one Alex Torrance.

Weeknotes

Hej. Sending this from Stockholm where I’m doing some work with Trinity Business School and a group of Brazilian Credit Cooperative CEOs (first time I’ve delivered a working session in Portuguese - live translation, not me, you understand). Stockholm really is one of my favourite cities, but sadly I’ll be flying straight out and back to the Middle East to do more with my banking client out there. This time is a new one for me though - a session with a group of product leaders on using AI in product innovation, so looking forward to seeing how it goes.

Thanks for subscribing to and reading Only Dead Fish. It means a lot. This newsletter is 100% free to read so if you liked this episode please do like, share and pass it on.

If you’d like more from me my blog is over here and my personal site is here, and do get in touch if you’d like me to give a talk to your team or talk about working together.

My favourite quote captures what I try to do every day, and it’s from renowned Creative Director Paul Arden: ‘Do not covet your ideas. Give away all you know, and more will come back to you’.

And remember - only dead fish go with the flow.
