Fish Food: Episode 649 - Complexity bias in AI application

Simplicity and clarity as a strategy in AI, radical org design, phones vs TV, the reality of modern work, Deep Research and why it's not a good idea to wake up with the news

This week’s provocation: Simplicity and clarity as a strategy in AI

For 17 years, scientists at Australia’s Parkes Radio Telescope were chasing mysterious radio blips, nicknamed perytons, that stubbornly defied explanation. First detected in 1998, these fleeting signals closely resembled fast radio bursts (FRBs), which some excitedly speculated were astrophysical in origin. But they didn’t behave like anything out in space. They appeared only during the daytime, once or twice a year, and were always picked up when the telescope pointed in a specific direction.

Initially, researchers suspected that local atmospheric interference, perhaps lightning, could be the source of the signals. That theory persisted for many years until early 2015, when a new radio‑frequency interference (RFI) monitor was installed. After years of searching, the astronomers identified the real source – a microwave oven in the facility’s break room.

The astronomers were operating the telescope remotely but there were a small number of maintenance staff on site. When the microwave oven was set to heat something up but then was opened part way through (perhaps to check on the contents) it emitted signals that the telescope was able to pick up when it was pointing in the general direction. Years of mysterious signals originating from a maintenance worker heating up their lunch.

As humans we often prefer complicated solutions over easy ones (complexity bias) and we can miss what’s right in front of us. In working with and applying AI I think we’re at risk of falling foul of complexity bias in some key areas, from prompting to products to AI systems.

Prompting

In prompting, for example, over on LinkedIn right now there are a lot of people pushing the idea of complex ‘magic prompts’. You know the kind of thing: ‘cut and paste this 16-page prompt into ChatGPT to skyrocket your strategy’ (yep, I did actually see someone promoting a 16-page prompt). Magic prompts may shortcut the process but this ultimately means that we don’t learn how to work with AI in our own way. They also overcomplicate the prompt itself by trying to include every possible nuance in the very first attempt.

LLMs process text as sequences of tokens (words or parts of words), each represented by a number. Each token is mapped to a vector, a set of numerical values representing its meaning based on usage patterns across billions of sentences. The model doesn’t ‘know’ what a word means; it just knows what kinds of words are likely to follow it, based on statistical relationships in its training data. So while a human might intuit the subtle difference between words with similar meanings, an LLM needs cues from context, sentence structure, or examples to make that distinction.
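To make the ‘likely to follow’ idea concrete, here is a deliberately tiny sketch. It is not how a real LLM works (those use sub-word tokens, learned vectors and neural networks rather than simple counts), and the three-sentence corpus and function names are made up for illustration. It simply turns words into numbers and counts which word tends to come next.

```python
from collections import Counter, defaultdict

# Toy "training data" (hypothetical, for illustration only).
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]

# Step 1: give every distinct word a number (a token id).
# Real LLMs split text into sub-word tokens; whole words keep this simple.
vocab = {}
for sentence in corpus:
    for word in sentence.split():
        vocab.setdefault(word, len(vocab))

id_to_word = {token_id: word for word, token_id in vocab.items()}


def encode(text):
    """Turn text into a list of token ids."""
    return [vocab[word] for word in text.split()]


# Step 2: count which token follows which across the corpus.
follow_counts = defaultdict(Counter)
for sentence in corpus:
    ids = encode(sentence)
    for current, following in zip(ids, ids[1:]):
        follow_counts[current][following] += 1


def next_word_probabilities(word):
    """Share of times each word followed `word` in the toy corpus."""
    counts = follow_counts[vocab[word]]
    total = sum(counts.values())
    return {id_to_word[i]: n / total for i, n in counts.items()}


print(encode("the cat sat"))
print(next_word_probabilities("cat"))
```

The model here has no idea what a cat is. It only knows that, in its data, ‘cat’ was followed by ‘sat’ half the time and ‘ate’ the other half, which is why clear context in a prompt matters so much.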

For this reason, spending a little time being clear about what it is we’re actually trying to do, rather than going straight to ChatGPT, always pays dividends. Francois Grouiller’s ‘Think-Prompt-Think’ approach, for example, starts with a human focus on framing the right question to ask and how to express it with clarity. Magic prompts may answer the question, but it could well be the wrong question.

AI product strategy and innovation

Not everything is an AI-shaped challenge. Sometimes teams rush to use generative AI or LLMs for problems that a well-designed rule-based system or a structured database query could solve faster and more reliably. AI is a powerful tool, but not always the right one, and sometimes the best AI strategy is not to use AI at all, or at least to apply it more lightly than instinct suggests.
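As a rough illustration of the ‘rules first’ idea, here is a minimal sketch of routing incoming customer messages with a handful of plain pattern-matching rules, only escalating when nothing matches. The patterns, action names and the `route` function are all hypothetical, invented for this example rather than taken from any real product.

```python
import re

# Hypothetical rules: each pattern maps to a simple, predictable action.
RULES = [
    (re.compile(r"\b(reset|forgot).*password\b", re.IGNORECASE),
     "send_password_reset_link"),
    (re.compile(r"\border\s+#?\d+\b", re.IGNORECASE),
     "look_up_order_status"),
    (re.compile(r"\b(opening|business) hours\b", re.IGNORECASE),
     "reply_with_opening_hours"),
]


def route(message):
    """Return the first matching rule's action, else escalate."""
    for pattern, action in RULES:
        if pattern.search(message):
            return action
    # Only messages the rules cannot handle go to an LLM or a person.
    return "escalate_to_llm_or_human"


print(route("I forgot my password"))
print(route("Can you summarise this contract for me?"))
```

The rules are fast, cheap and easy to explain, and the AI is kept for the open-ended requests where it genuinely adds something.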

Complexity bias leads us to add things rather than take them away. The risk of an AI-is-the-answer-to-everything assumption is that the team develop complex AI solutions for things that could be done in much simpler ways. Writer Craig Mod once compared this desire to add complexity to the time Homer Simpson was asked to design a car:

‘When Homer Simpson was asked to design his ideal car, he made The Homer. Given free reign, Homer’s process was additive. He added three horns and a special sound-proof bubble for the children. He layered more atop everything cars had been. More horns, more cup holders.’

In product design, says Craig, the simplest thought exercise is to make additions, and it’s the easiest way to make the old thing feel like a new thing. Adding AI feels like it’s a forward-thinking thing to do but if we’re simply adding complexity we are in danger of ‘doing a Homer’.

AI explainability and trust

In AI, explainability matters. Making the internal workings and outputs of an AI model or system transparent helps to build trust and accountability, and can empower users to dispute or alter AI-driven decisions. The opposite of explainability is opaque, complex systems that no-one trusts and no-one can navigate.

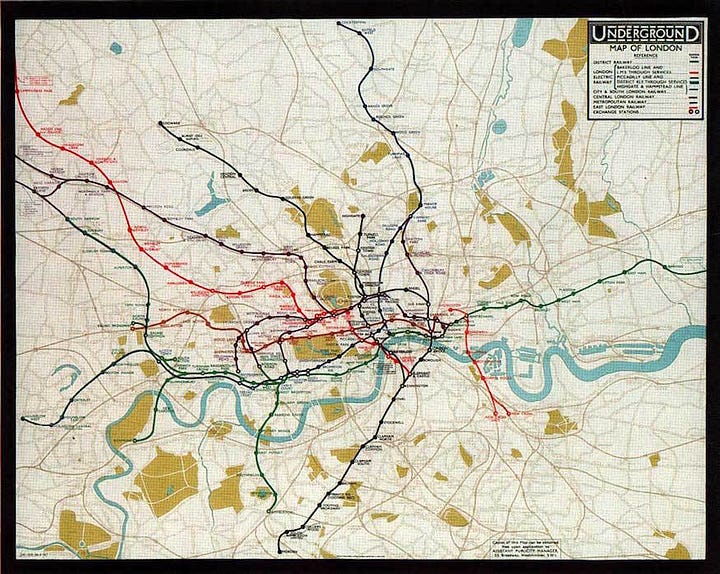

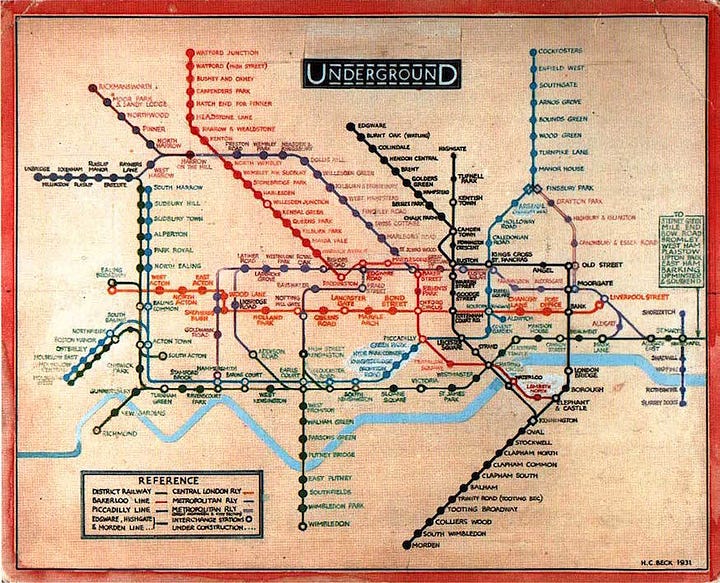

The first London Underground maps in the early 20th century (left, above) tried to represent stations in a geographically accurate way so that they could be transposed onto an above-ground street map. It was believed that being geographically correct would help Underground users to navigate the system, but the resulting map was crowded, visually overwhelming and confusing to use.

Henry Beck, an engineering draughtsman who had lost his job at the Underground Electric Railways Company of London (UERL) due to budget cuts, decided to redesign the map. He set about ‘straightening the lines, experimenting with diagonals and evening out the distance between stations’. In 1931 he submitted his work to the UERL (right, above), who promptly rejected his ‘revolutionary’ map. Fortunately for us he persisted and the map was eventually published, becoming an instant hit.

Beck’s map was abstract, schematic, and geographically ‘wrong’ but it was beautifully, radically simple. He had understood that people didn’t need to know where stations were in the real world, just how to get from one to another. It was the simplicity that made the system legible.

Learning from Henry Beck, we need to design AI systems in ways that make them easy to understand, and in ways that are closely aligned to how people want to meet their needs. In his epic presentation on AI trends, Benedict Evans made the point that many people in organisations are being told to use AI but they don’t actually know what they should be using it for. Quite apart from the mystifying lack of training and support that staff are generally getting, ensuring good explainability and understanding is crucial to building trust in these systems. In this context simplicity isn’t just elegant, it’s operationally smart.

Rewind and catch up:

Gigantomania and why big projects go wrong

Superagency: Amplifying Human Capability with AI

Image: CSIRO, CC BY 3.0

If you do one thing this week…

I’m fascinated by flatter, more agile org design (I wrote about a few examples of this in my books), and this week journalist Aimee Groth shared a few insights into Bayer’s transformation into a Dynamic Shared Ownership (DSO) model, which replaces traditional hierarchy with ‘thousands of self-directed teams working in 90-day sprints’. The model is designed to decentralise decision-making, speed up innovation, and shift accountability downwards, and the need to save $2Bn by the end of 2026 to turn the business around puts added pressure on this transformative org design. One of my favourite bits:

‘We’re also replacing things like the annual budget process. Instead of spending three to five months a year doing this big bureaucratic exercise, people now spend one day every 90 days asking: How did we do in the last 90? What are the most important objectives for the next 90? Do we have the right team? Do we need to add or move people? Then we work for 89 days and do it again.’

In each cycle, 10–15% of employees change roles. A short interview with Bayer CEO Bill Anderson is featured in NYT Dealbook (you need to scroll down a bit to see it), and you can read more about the origins here and here. Over the years I’ve done a fair bit of work with Roche, who have also implemented an ‘agile-at-scale’ approach, and it’s energising to watch CEOs taking such a transformative approach.

Links of the week

The new IPA TouchPoints survey results were heralded with the (well-shared) headline that for the first time people in the UK now spend more time on their mobiles (3 hours 21 mins) than watching TV. But there was an interesting nuance to the findings that was much less widely reported but which was highlighted by David Wilding: "TouchPoints also tracks emotional states throughout the day. The data reveals that British adults are 52% more likely to feel relaxed when watching the TV set compared to viewing video on a mobile phone. Conversely, viewers are 55% more likely to report feeling sad when watching video on a mobile phone versus on a television set."

Some stark statistics on the modern work environment in this summary of the key findings of that Microsoft workplace report which I featured a few weeks back. It was all in there, but seeing it laid out like this brings home the reality of what most knowledge workers are dealing with: 117 emails and 270 notifications a day, 2 minutes between interruptions, before-hours emails and after-hours messaging, and the most valuable hours of the day often ruled by someone else’s agenda.

This 30-minute talk at Y Combinator from computer scientist Andrej Karpathy is a good software builder’s view on a future of AI as an extension of human capabilities through semi-autonomous AI agents which, as Nate Jones points out, contrasts with the McKinsey consultants’ view of agentic AI as a ‘mesh’ (PDF report) featuring enterprise-wide networks of autonomous agents coordinating seamlessly across organisations.

Google DeepMind released a sneak preview of how Gemini 2.5 Flash-Lite can ‘write the code for a UI and its contents based solely on the context of what appears in the previous screen’. We’re currently at an early stage of UI with AI where everything goes through a simple chat interface, but it’s an intriguing thought that user interfaces are likely to be built on the fly in the future.

This was a good guide to getting the most out of Deep Research. I use ChatGPT and Gemini Deep Research all the time in my work and I’m not sure what I’d do without them now.

I liked this provocation about a different lens through which to look at AI implementation - not just the consultant-friendly productivity, efficiency, growth, and competitive advantage, but also ‘Degrowth: AI that helps us live within planetary boundaries, optimising for sufficiency and care.’ and ‘Relational: AI as a partner in collective well-being, not just a tool for outpacing the competition’. Yes.

And finally…

I’ve followed artist and author Austin Kleon for years, and subscribed to his ‘10 things’ newsletter for a good while (for reading and culture tips). This week he shared a short video on why it’s not a good idea to wake up with the news, which feels like apposite advice right now.

Weeknotes

This week I’ve been prepping for an upcoming programme that I’m doing which is seeking to turn smart senior leaders into the Chief AI Officers of the future. It’s an absolutely fascinating project and stretching in a good way. I also did some teaching this week at Imperial College London on their Digital Transformation Strategy programme, and met some lovely people.

Thanks for subscribing to and reading Only Dead Fish. It means a lot. This newsletter is 100% free to read so if you liked this episode please do like, share and pass it on.

If you’d like more from me, my blog is over here and my personal site is here, and do get in touch if you’d like me to give a talk to your team or talk about working together.

My favourite quote captures what I try to do every day, and it’s from renowned Creative Director Paul Arden: ‘Do not covet your ideas. Give away all you know, and more will come back to you’.

And remember - only dead fish go with the flow.

Wonderful story on the underground Neil.

One of the many mistakes I made in marketing was not questioning assumptions. I’d read a book and believe it. A senior marketer would say something and I assumed it was true. At some stage I started questioning assumptions. I don’t remember when exactly as it is very natural to me now. Sceptical not cynical is how I describe it. But I can imagine how much damage marketers will create for themselves with AI if they don’t learn how to think critically and question where a stat came from or worse, AI’s layered in opinion that is framed as fact.

Loved that Simpsons episode.